Lihua Xie

Failure-Aware Bimanual Teleoperation via Conservative Value Guided Assistance

Feb 01, 2026

Abstract: Teleoperation of high-precision manipulation is constrained by tight success tolerances and complex contact dynamics, which make impending failures difficult for human operators to anticipate under partial observability. This paper proposes a value-guided, failure-aware framework for bimanual teleoperation that provides compliant haptic assistance while preserving continuous human authority. The framework is trained entirely from heterogeneous offline teleoperation data containing both successful and failed executions. Task feasibility is modeled as a conservative success score learned via Conservative Value Learning, yielding a risk-sensitive estimate that remains reliable under distribution shift. During online operation, the learned success score regulates the level of assistance, while a learned actor provides a corrective motion direction. Both are integrated through a joint-space impedance interface on the master side, yielding continuous guidance that steers the operator away from failure-prone actions without overriding intent. Experimental results on contact-rich manipulation tasks demonstrate improved task success rates and reduced operator workload compared to conventional teleoperation and shared-autonomy baselines, indicating that conservative value learning provides an effective mechanism for embedding failure awareness into bilateral teleoperation. Experimental videos are available at https://www.youtube.com/watch?v=XDTsvzEkDRE
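As a rough illustration of how a success score could regulate assistance while an actor supplies the direction, the sketch below gates a joint-space impedance torque by a score in [0, 1]. It is not the authors' implementation: the function names, the linear ramp between the `low` and `high` thresholds, and all gain values are assumptions made for this example.

```python
def assistance_gain(success_score, low=0.3, high=0.8, max_gain=1.0):
    """Map a success score in [0, 1] to an assistance level (hypothetical ramp).

    No assistance when the score is at or above `high`, full assistance at or
    below `low`, and a linear ramp in between.
    """
    if success_score >= high:
        return 0.0
    if success_score <= low:
        return max_gain
    return max_gain * (high - success_score) / (high - low)


def assist_torque(q, dq, direction, success_score,
                  stiffness=20.0, damping=2.0, step=0.05):
    """Per-joint impedance torque pulling toward a small step along `direction`.

    q, dq      : current joint positions and velocities of the master device
    direction  : corrective joint-space direction (stand-in for an actor output)
    All gains are illustrative placeholders, not values from the paper.
    """
    gain = assistance_gain(success_score)
    torques = []
    for qi, dqi, di in zip(q, dq, direction):
        q_ref = qi + step * di  # reference slightly ahead along the correction
        torques.append(gain * (stiffness * (q_ref - qi) - damping * dqi))
    return torques


if __name__ == "__main__":
    # Low score: assistance is fully engaged.
    print(assist_torque([0.0, 0.0], [0.0, 0.0], [1.0, -1.0], success_score=0.1))
    # High score: the operator feels no added torque.
    print(assist_torque([0.0, 0.0], [0.0, 0.0], [1.0, -1.0], success_score=0.9))
```

Because the torque scales continuously with the gain and stays a compliant spring-damper term, the operator can always push against it, which matches the abstract's emphasis on guidance without overriding intent.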

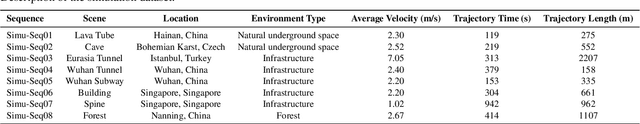

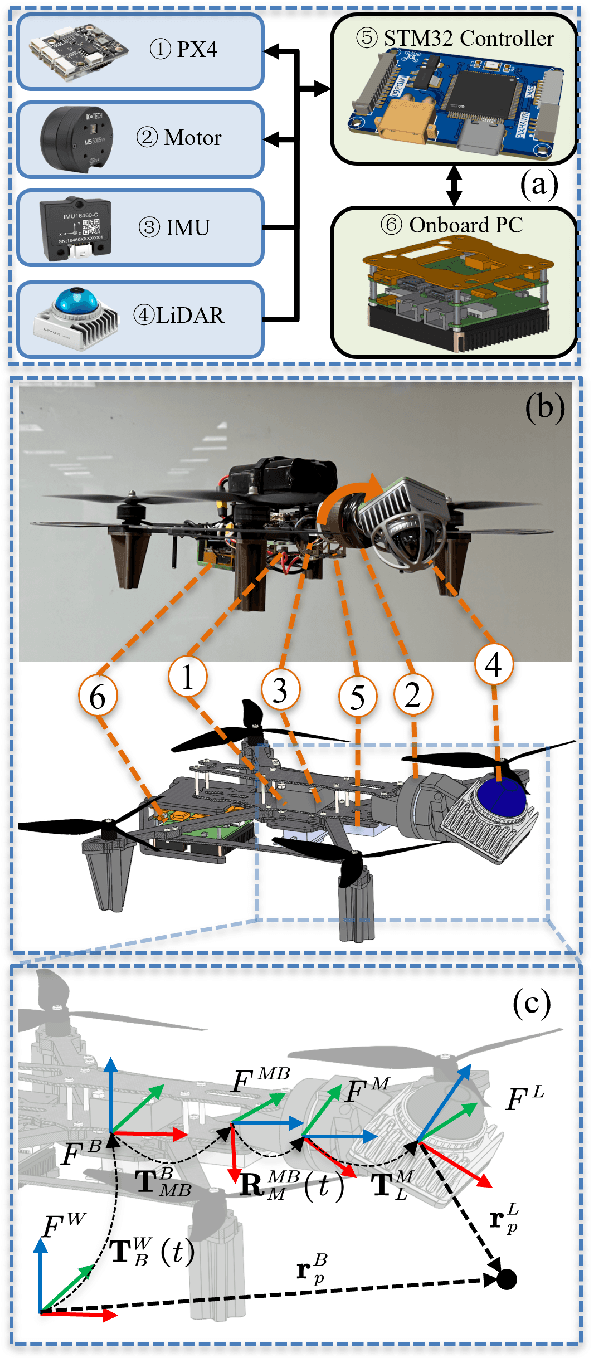

Accurate Calibration and Robust LiDAR-Inertial Odometry for Spinning Actuated LiDAR Systems

Jan 24, 2026

Abstract: Accurate calibration and robust localization are fundamental for downstream tasks in spinning actuated LiDAR applications. Existing methods, however, require parameterizing extrinsic parameters based on different mounting configurations, limiting their generalizability. Additionally, spinning actuated LiDAR inevitably scans featureless regions, which complicates the balance between scanning coverage and localization robustness. To address these challenges, this letter presents a targetless LiDAR-motor calibration (LM-Calibr) on the basis of the Denavit-Hartenberg convention and an environmental adaptive LiDAR-inertial odometry (EVA-LIO). LM-Calibr supports calibration of LiDAR-motor systems with various mounting configurations. Extensive experiments demonstrate its accuracy and convergence across different scenarios, mounting angles, and initial values. Additionally, EVA-LIO adaptively selects downsample rates and map resolutions according to spatial scale. This adaptivity enables the actuator to operate at maximum speed, thereby enhancing scanning completeness while ensuring robust localization, even when LiDAR briefly scans featureless areas. The source code and hardware design are available on GitHub: https://github.com/zijiechenrobotics/lm_calibr. The video is available at https://youtu.be/cZyyrkmeoSk
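For readers unfamiliar with the Denavit-Hartenberg convention mentioned above, the sketch below builds the textbook (classic) DH homogeneous transform from four parameters. How LM-Calibr actually parameterizes the LiDAR-motor chain is not stated in the abstract, so the function name and the choice of the classic (rather than modified) convention are assumptions.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Classic DH transform: Rot_z(theta) * Trans_z(d) * Trans_x(a) * Rot_x(alpha).

    Returns a 4x4 homogeneous matrix as nested lists (row-major).
    """
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ]


if __name__ == "__main__":
    # A quarter-turn motor angle with a 2 m link length and 1 m offset
    # (illustrative numbers only).
    for row in dh_transform(math.pi / 2, 1.0, 2.0, 0.0):
        print(["%.3f" % v for v in row])
```

The appeal of a DH-style description is that any mounting configuration is covered by the same four parameters per link, which is consistent with the abstract's claim of supporting various mounting configurations.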

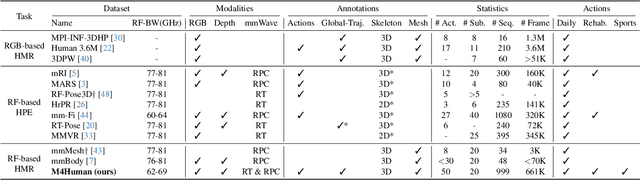

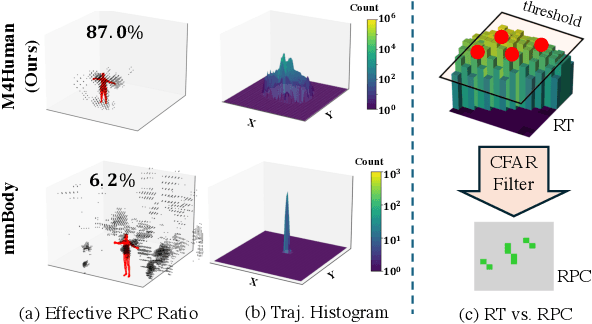

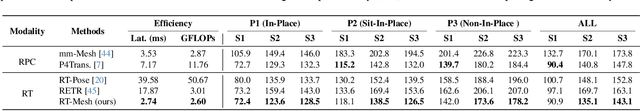

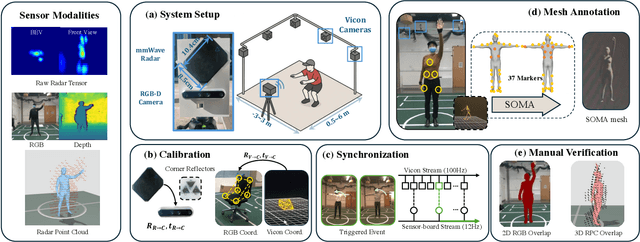

M4Human: A Large-Scale Multimodal mmWave Radar Benchmark for Human Mesh Reconstruction

Dec 17, 2025

Abstract: Human mesh reconstruction (HMR) provides direct insights into body-environment interaction, which enables various immersive applications. While existing large-scale HMR datasets rely heavily on line-of-sight RGB input, vision-based sensing is limited by occlusion, lighting variation, and privacy concerns. To overcome these limitations, recent efforts have explored radio-frequency (RF) mmWave radar for privacy-preserving indoor human sensing. However, current radar datasets are constrained by sparse skeleton labels, limited scale, and simple in-place actions. To advance the HMR research community, we introduce M4Human, currently the largest multimodal benchmark of its kind (661K frames, 9× the prior largest), featuring high-resolution mmWave radar, RGB, and depth data. M4Human provides both raw radar tensors (RT) and processed radar point clouds (RPC) to enable research across different levels of RF signal granularity. M4Human includes high-quality motion capture (MoCap) annotations with 3D meshes and global trajectories, and spans 20 subjects and 50 diverse actions, including in-place, sit-in-place, and free-space sports or rehabilitation movements. We establish benchmarks on both RT and RPC modalities, as well as multimodal fusion with RGB-D modalities. Extensive results highlight the significance of M4Human for radar-based human modeling while revealing persistent challenges under fast, unconstrained motion. The dataset and code will be released after the paper publication.

Zero-Shot Open-Vocabulary Human Motion Grounding with Test-Time Training

Nov 19, 2025

Abstract: Understanding complex human activities demands the ability to decompose motion into fine-grained, semantic-aligned sub-actions. This motion grounding process is crucial for behavior analysis, embodied AI and virtual reality. Yet, most existing methods rely on dense supervision with predefined action classes, which are infeasible in open-vocabulary, real-world settings. In this paper, we propose ZOMG, a zero-shot, open-vocabulary framework that segments motion sequences into semantically meaningful sub-actions without requiring any annotations or fine-tuning. Technically, ZOMG integrates (1) language semantic partition, which leverages large language models to decompose instructions into ordered sub-action units, and (2) soft masking optimization, which learns instance-specific temporal masks to focus on frames critical to sub-actions, while maintaining intra-segment continuity and enforcing inter-segment separation, all without altering the pretrained encoder. Experiments on three motion-language datasets demonstrate state-of-the-art effectiveness and efficiency of motion grounding performance, outperforming prior methods by +8.7% mAP on the HumanML3D benchmark. Meanwhile, significant improvements also exist in downstream retrieval, establishing a new paradigm for annotation-free motion understanding.

STARC: See-Through-Wall Augmented Reality Framework for Human-Robot Collaboration in Emergency Response

Sep 19, 2025

Abstract: In emergency response missions, first responders must navigate cluttered indoor environments where occlusions block direct line-of-sight, concealing both life-threatening hazards and victims in need of rescue. We present STARC, a see-through AR framework for human-robot collaboration that fuses mobile-robot mapping with responder-mounted LiDAR sensing. A ground robot running LiDAR-inertial odometry performs large-area exploration and 3D human detection, while helmet- or handheld-mounted LiDAR on the responder is registered to the robot's global map via relative pose estimation. This cross-LiDAR alignment enables consistent first-person projection of detected humans and their point clouds, rendered in AR with low latency, into the responder's view. By providing real-time visualization of hidden occupants and hazards, STARC enhances situational awareness and reduces operator risk. Experiments in simulation, lab setups, and tactical field trials confirm robust pose alignment, reliable detections, and stable overlays, underscoring the potential of our system for fire-fighting, disaster relief, and other safety-critical operations. Code and design will be open-sourced upon acceptance.

Energy-Constrained Navigation for Planetary Rovers under Hybrid RTG-Solar Power

Sep 18, 2025

Abstract: Future planetary exploration rovers must operate for extended durations on hybrid power inputs that combine steady radioisotope thermoelectric generator (RTG) output with variable solar photovoltaic (PV) availability. While energy-aware planning has been studied for aerial and underwater robots under battery limits, few works for ground rovers explicitly model power flow or enforce instantaneous power constraints. Classical terrain-aware planners emphasize slope or traversability, and trajectory optimization methods typically focus on geometric smoothness and dynamic feasibility, neglecting energy feasibility. We present an energy-constrained trajectory planning framework that explicitly integrates physics-based models of translational, rotational, and resistive power with baseline subsystem loads, under hybrid RTG-solar input. By incorporating both cumulative energy budgets and instantaneous power constraints into SE(2)-based polynomial trajectory optimization, the method ensures trajectories that are simultaneously smooth, dynamically feasible, and power-compliant. Simulation results on lunar-like terrain show that our planner generates trajectories with peak power within 0.55 percent of the prescribed limit, while existing methods exceed limits by over 17 percent. This demonstrates a principled and practical approach to energy-aware autonomy for long-duration planetary missions.
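To make the distinction between an instantaneous power constraint and a cumulative energy budget concrete, the sketch below checks both on a sampled trajectory. The power model is a deliberately simple stand-in (translational, rotational, and rolling-resistance terms plus a constant baseline load); the function names, the form of each term, and every numeric parameter are assumptions for illustration, not the paper's model.

```python
def power_demand(v, a, omega, alpha,
                 mass=100.0, inertia=20.0, c_rr=0.1, g=1.62, p_base=30.0):
    """Hypothetical instantaneous power draw in watts for one trajectory sample.

    v, a         : linear speed (m/s) and acceleration (m/s^2)
    omega, alpha : yaw rate (rad/s) and yaw acceleration (rad/s^2)
    Braking is assumed not to regenerate power, hence the max(..., 0).
    """
    p_trans = max(mass * a * v, 0.0)            # accelerating the body
    p_rot = max(inertia * alpha * omega, 0.0)   # accelerating the yaw rotation
    p_res = c_rr * mass * g * abs(v)            # rolling resistance (lunar g)
    return p_trans + p_rot + p_res + p_base


def check_trajectory(samples, dt, p_limit, energy_budget):
    """Return (feasible, peak_power, total_energy) for uniformly sampled states.

    Feasible means the peak power never exceeds p_limit (instantaneous
    constraint) and the summed energy stays within energy_budget (cumulative
    constraint).
    """
    peak = 0.0
    energy = 0.0
    for sample in samples:
        p = power_demand(*sample)
        peak = max(peak, p)
        energy += p * dt  # rectangle-rule integration of power over time
    return (peak <= p_limit and energy <= energy_budget), peak, energy


if __name__ == "__main__":
    cruise = [(1.0, 0.0, 0.0, 0.0)] * 10  # 10 s of steady 1 m/s driving
    print(check_trajectory(cruise, dt=1.0, p_limit=50.0, energy_budget=500.0))
    print(check_trajectory(cruise, dt=1.0, p_limit=40.0, energy_budget=500.0))
```

A trajectory can satisfy the energy budget and still violate the power limit during a brief acceleration, which is why the abstract treats the two constraints separately.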

PERAL: Perception-Aware Motion Control for Passive LiDAR Excitation in Spherical Robots

Sep 18, 2025

Abstract: Autonomous mobile robots increasingly rely on LiDAR-IMU odometry for navigation and mapping, yet horizontally mounted LiDARs such as the MID360 capture few near-ground returns, limiting terrain awareness and degrading performance in feature-scarce environments. Prior solutions (static tilt, active rotation, or high-density sensors) either sacrifice horizontal perception or incur added actuators, cost, and power. We introduce PERAL, a perception-aware motion control framework for spherical robots that achieves passive LiDAR excitation without dedicated hardware. By modeling the coupling between internal differential-drive actuation and sensor attitude, PERAL superimposes bounded, non-periodic oscillations onto nominal goal- or trajectory-tracking commands, enriching vertical scan diversity while preserving navigation accuracy. Implemented on a compact spherical robot, PERAL is validated across laboratory, corridor, and tactical environments. Experiments demonstrate up to 96 percent map completeness, a 27 percent reduction in trajectory tracking error, and robust near-ground human detection, all at lower weight, power, and cost compared with static tilt, active rotation, and fixed horizontal baselines. The design and code will be open-sourced upon acceptance.

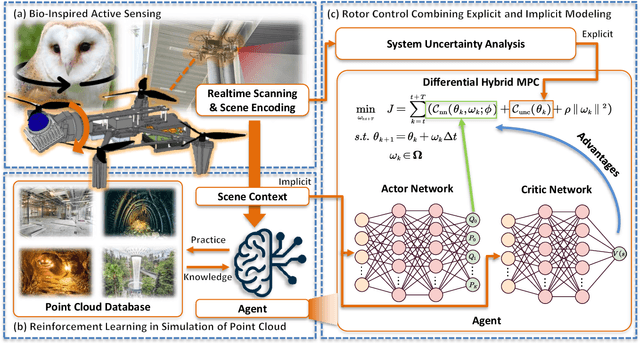

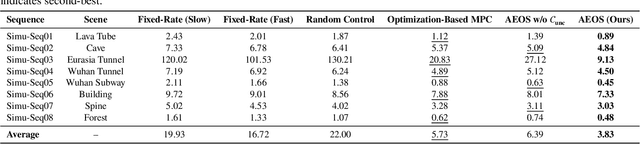

AEOS: Active Environment-aware Optimal Scanning Control for UAV LiDAR-Inertial Odometry in Complex Scenes

Sep 11, 2025

Abstract: LiDAR-based 3D perception and localization on unmanned aerial vehicles (UAVs) are fundamentally limited by the narrow field of view (FoV) of compact LiDAR sensors and the payload constraints that preclude multi-sensor configurations. Traditional motorized scanning systems with fixed-speed rotations lack scene awareness and task-level adaptability, leading to degraded odometry and mapping performance in complex, occluded environments. Inspired by the active sensing behavior of owls, we propose AEOS (Active Environment-aware Optimal Scanning), a biologically inspired and computationally efficient framework for adaptive LiDAR control in UAV-based LiDAR-Inertial Odometry (LIO). AEOS combines model predictive control (MPC) and reinforcement learning (RL) in a hybrid architecture: an analytical uncertainty model predicts future pose observability for exploitation, while a lightweight neural network learns an implicit cost map from panoramic depth representations to guide exploration. To support scalable training and generalization, we develop a point cloud-based simulation environment with real-world LiDAR maps across diverse scenes, enabling sim-to-real transfer. Extensive experiments in both simulation and real-world environments demonstrate that AEOS significantly improves odometry accuracy compared to fixed-rate, optimization-only, and fully learned baselines, while maintaining real-time performance under onboard computational constraints. The project page can be found at https://kafeiyin00.github.io/AEOS/.

Aerial Target Encirclement and Interception with Noisy Range Observations

Aug 11, 2025

Abstract: This paper proposes a strategy to encircle and intercept a non-cooperative aerial point-mass moving target by leveraging noisy range measurements for state estimation. In this approach, the guardians actively ensure the observability of the target by using an anti-synchronization (AS), 3D "vibrating string" trajectory, which enables rapid position and velocity estimation based on the Kalman filter. Additionally, a novel anti-target controller is designed for the guardians to enable adaptive transitions from encircling a protected target to encircling, intercepting, and neutralizing a hostile target, taking into consideration the input constraints of the guardians. Based on the guaranteed uniform observability, the exponentially bounded stability of the state estimation error and the convergence of the encirclement error are rigorously analyzed. Simulation results and real-world UAV experiments are presented to further validate the effectiveness of the system design.

EGS-SLAM: RGB-D Gaussian Splatting SLAM with Events

Aug 09, 2025

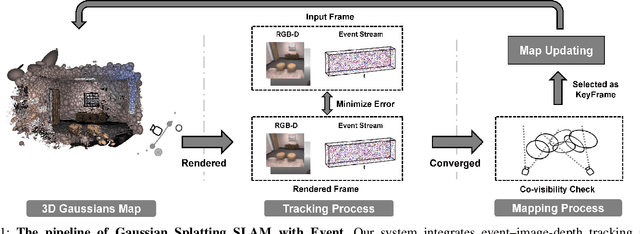

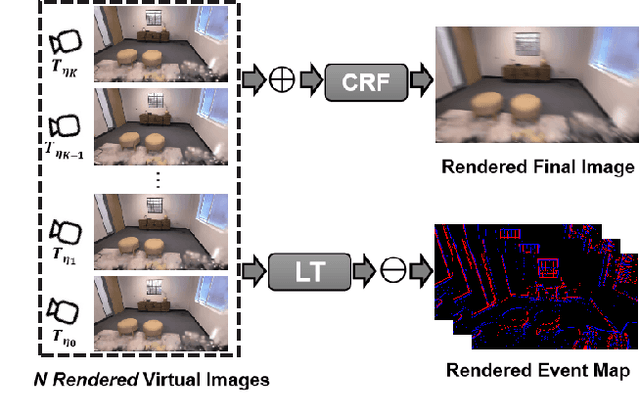

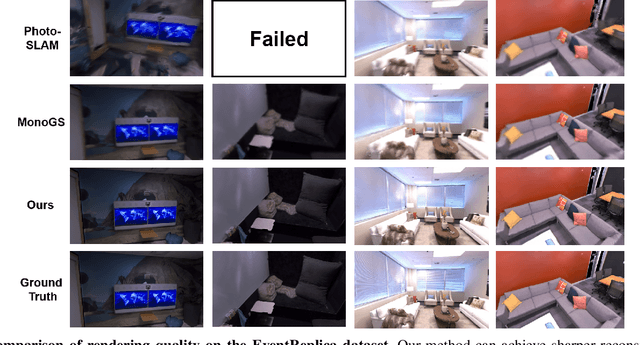

Abstract: Gaussian Splatting SLAM (GS-SLAM) offers a notable improvement over traditional SLAM methods, enabling photorealistic 3D reconstruction that conventional approaches often struggle to achieve. However, existing GS-SLAM systems perform poorly under persistent and severe motion blur commonly encountered in real-world scenarios, leading to significantly degraded tracking accuracy and compromised 3D reconstruction quality. To address this limitation, we propose EGS-SLAM, a novel GS-SLAM framework that fuses event data with RGB-D inputs to simultaneously reduce motion blur in images and compensate for the sparse and discrete nature of event streams, enabling robust tracking and high-fidelity 3D Gaussian Splatting reconstruction. Specifically, our system explicitly models the camera's continuous trajectory during exposure, supporting event- and blur-aware tracking and mapping on a unified 3D Gaussian Splatting scene. Furthermore, we introduce a learnable camera response function to align the dynamic ranges of events and images, along with a no-event loss to suppress ringing artifacts during reconstruction. We validate our approach on a new dataset comprising synthetic and real-world sequences with significant motion blur. Extensive experimental results demonstrate that EGS-SLAM consistently outperforms existing GS-SLAM systems in both trajectory accuracy and photorealistic 3D Gaussian Splatting reconstruction. The source code will be available at https://github.com/Chensiyu00/EGS-SLAM.
